The purpose of this review is to highlight space autonomy advances across mission phases, capture the anticipated need for autonomy and associated rationale, assess state of the practice, and share thoughts for future advancements that could lead to a new frontier in space exploration.

Over the past two decades, several autonomous functions and system-level capabilities have been demonstrated and used in spacecraft operations. In spite of that, spacecraft today remain largely reliant on ground in the loop to assess situations and plan next actions, using pre-scripted command sequences. Advances have been made across mission phases including spacecraft navigation; proximity operations; entry, descent, and landing; surface mobility and manipulation; and data handling. But past successful practices may not be sustainable for future exploration. The ability of ground operators to predict the outcome of their plans seriously diminishes when platforms physically interact with planetary bodies, as has been experienced in two decades of Mars surface operations. This results from uncertainties that arise due to limited knowledge, complex physical interaction with the environment, and limitations of associated models.

Robotics and autonomy are synergistic: robotics provides flexibility, and autonomy exercises it to explore unknown worlds more effectively and robustly. Such capabilities can be substantially advanced by leveraging the rapid growth in SmallSats, the relative accessibility of near-Earth objects, and the recent increase in launch opportunities.


The critical role that robotics and autonomous systems can play in enabling the exploration of planetary surfaces has been projected for many decades and was foreseen in 1980 by a NASA study group on “Robotics and Machine Intelligence” led by Carl Sagan [1]. As of this writing, we are only 2 years away from achieving a continuous robotic presence on Mars for one-quarter century. Orbiters, landers, and rovers have been exploring the Martian surface and subsurface, both at global and local scales, to understand its evolution, topography, climate, geology, and habitability. Robotics has enabled missions to traverse tens of kilometers across the red planet, sample its surface, and place different instruments on numerous targets. However, the planetary exploration of Mars has remained heavily reliant on ground in the loop for its daily operations. The situation is similar for other planetary missions, which are largely operated by a ground crew. A number of technical and programmatic factors determine the degree to which missions are able to operate autonomously.

Despite that, autonomy has been used across mission phases including in-space operations, small-body proximity operations, landing, surface contact and interaction, and mobility. Past successful practices may be neither sustainable nor scalable for future exploration, which would drive the need for increased autonomy, as we analyze in this article.

NASA defines autonomy as “the ability of a system to achieve goals while operating independently of external control” [2]. In the NASA parlance, a system is the combination of elements that function together to produce the capability that is required to meet a need. These elements include personnel, hardware, software, equipment, facilities, processes, and procedures needed for this purpose [3]. So, by this definition, an autonomous system may involve a combination of astronauts and machines operating independently of an external entity such as ground control or an orbiting crew. However, in this article, we only consider autonomy in the context of a robotic spacecraft, where the external actor is ground control. Autonomous robots operated by astronauts, whether in proximity or remotely, are outside the scope of this article.

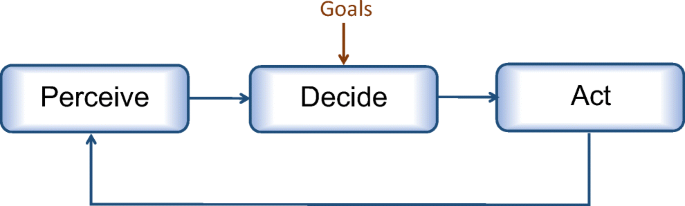

Figure 1 shows the basic abstraction of an autonomous system. With inputs that define the desired objectives or goals, the system perceives its environment and itself (for health monitoring), reasons about them, decides what actions to take, and then executes those actions. The actions affect the system and/or the environment, which in turn affects what is perceived next. Today’s spacecraft operate largely within the act domain. Perception (except for sensory measurements and rudimentary signal processing) and decision-making are largely performed by personnel on Earth, who also generate commands to be uplinked to the spacecraft to initiate the next set of actions. Autonomous perceptions, decisions, and actions are delegated to the spacecraft only in limited cases, when no alternative exists. Onboard autonomy removes communication delays from the decision loop and, with them, the stale state information that ground operators must contend with to close the loop.
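To make the effect of stale state concrete, the toy sketch below closes a perceive-decide-act loop on a single scalar state. It is an illustrative assumption, not a model of any real spacecraft: the function name `run_loop`, the proportional gain, and the delay expressed in control steps are all hypothetical. With `delay=0` the decision-maker sees the current state, as onboard autonomy would; with a nonzero delay it acts on an older state, standing in for a loop that must wait for telemetry.

```python
def run_loop(goal, steps, gain=0.5, delay=0, x0=0.0):
    """Toy perceive-decide-act loop on one scalar state (hypothetical model).

    `delay` is the number of steps by which the observed state lags the
    true state; delay=0 stands for onboard decision-making.
    """
    # history[i] holds the true state at step i; pre-filled so stale reads exist.
    history = [x0] * (delay + 1)
    for _ in range(steps):
        observed = history[-1 - delay]          # perceive (possibly stale)
        action = gain * (goal - observed)       # decide: step toward the goal
        history.append(history[-1] + action)    # act: the action changes the state
    return history[-1]


onboard = run_loop(goal=10.0, steps=20)
delayed = run_loop(goal=10.0, steps=20, delay=3)
print(f"onboard final state: {onboard:.4f}")
print(f"delayed final state: {delayed:.4f}")
```

In this toy model the undelayed loop converges smoothly to the goal, while the same gain applied to a state three steps old overshoots and oscillates with growing amplitude. Lowering the gain restores stability under delay at the cost of slower convergence, which loosely mirrors the conservative pacing of ground-in-the-loop operations.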

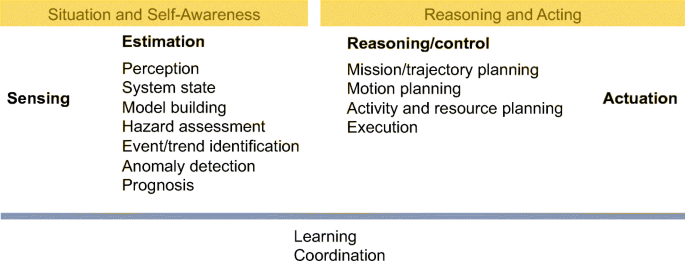

Figure 2 shows the basic abstract functions of an autonomous system for situation and self-awareness as well as for reasoning and acting. Situation and self-awareness require sensing and estimation that encompass perception, system-state estimation, model building, hazard assessment, event and trend identification, anomaly detection, and prognosis. Reasoning and acting encompass planning trajectories/motion (mission), planning and managing the usage of resources, and executing activities. This function is also responsible for reconciling conflicting information before execution. Some functions, such as learning and coordination, can be employed across a system and among systems. For example, learning can occur in sensing, estimation, reasoning, and/or acting.
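Several of the awareness functions listed above (anomaly detection, trend identification, and prognosis) can be illustrated on a single telemetry channel. The sketch below is a deliberately simple assumption, not a flight algorithm: it flags samples that deviate by more than `k` standard deviations from a trailing window, fits a least-squares slope as the trend, and extrapolates that trend linearly to estimate how many samples remain before an upper limit is reached. All function names, the window size, and the thresholds are hypothetical.

```python
from statistics import mean, pstdev


def detect_anomalies(samples, window=5, k=3.0, min_sigma=1e-6):
    """Return indices whose value deviates by more than k sigma from the trailing window."""
    flagged = []
    for i in range(window, len(samples)):
        ref = samples[i - window:i]
        mu = mean(ref)
        # Floor on sigma so a perfectly flat window does not flag negligible numerical noise.
        sigma = max(pstdev(ref), min_sigma)
        if abs(samples[i] - mu) > k * sigma:
            flagged.append(i)
    return flagged


def trend_slope(samples):
    """Least-squares slope of the samples versus their index (units per sample)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples for a trend")
    x_bar = (n - 1) / 2
    y_bar = mean(samples)
    num = sum((i - x_bar) * (y - y_bar) for i, y in enumerate(samples))
    den = sum((i - x_bar) ** 2 for i in range(n))
    return num / den


def samples_until_limit(samples, limit):
    """Prognosis by linear extrapolation toward an upper limit; None if not approaching it."""
    if samples[-1] >= limit:
        return 0.0
    slope = trend_slope(samples)
    if slope <= 0:
        return None
    return (limit - samples[-1]) / slope


temps = [20.0, 20.1, 20.2, 20.3, 20.4, 20.5, 27.0, 20.7, 20.8]   # hypothetical channel
print(detect_anomalies(temps))                                   # [6]
print(samples_until_limit([1.0, 2.0, 3.0, 4.0], limit=10.0))      # 6.0
```

A trailing window adapts to slow drifts, so the drift itself is handled by the trend and prognosis functions rather than being flagged as an anomaly.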

The autonomous functions of a spacecraft are often categorized into two groups: functional level and system level.

Functional-level autonomy is typically focused on specific subsystems and implemented with local state machines and control loops, providing a specific subsystem behavior. These domain-specific functions include perception-rich behaviors for in-space operations such as cruise trajectory corrections and proximity operations, getting to a surface via entry, descent, and landing (EDL), and mobility on/over a surface. They also include physical interaction between two assets, such as in-space spacecraft-to-spacecraft docking, grappling, or assembly as well as reasoning within a science instrument to analyze data and make decisions based on goals.
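As a sketch of the local state machine pattern described above, the following hypothetical grapple subsystem reacts only to its own events. The modes, event names, and transitions are invented for illustration and do not describe any flown system. The machine knows nothing about power, thermal, or communication state, which is the gap that system-level autonomy is meant to fill.

```python
from enum import Enum, auto


class Mode(Enum):
    IDLE = auto()
    APPROACH = auto()
    CAPTURE = auto()
    SECURED = auto()
    SAFE_HOLD = auto()


# (current mode, event) -> next mode. Modes and events are hypothetical.
TRANSITIONS = {
    (Mode.IDLE, "start"): Mode.APPROACH,
    (Mode.APPROACH, "in_range"): Mode.CAPTURE,
    (Mode.APPROACH, "range_rate_high"): Mode.SAFE_HOLD,
    (Mode.CAPTURE, "latched"): Mode.SECURED,
    (Mode.CAPTURE, "miss"): Mode.APPROACH,      # back off and retry the approach
}


class GrappleStateMachine:
    def __init__(self):
        self.mode = Mode.IDLE
        self.rejected = []   # (mode, event) pairs that had no defined transition

    def handle(self, event):
        # Local fault response: either active mode drops to SAFE_HOLD on "fault".
        if event == "fault" and self.mode in (Mode.APPROACH, Mode.CAPTURE):
            self.mode = Mode.SAFE_HOLD
        elif (self.mode, event) in TRANSITIONS:
            self.mode = TRANSITIONS[(self.mode, event)]
        else:
            self.rejected.append((self.mode, event))
        return self.mode


sm = GrappleStateMachine()
for ev in ["start", "in_range", "miss", "in_range", "latched"]:
    sm.handle(ev)
print(sm.mode)   # Mode.SECURED
```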

System-level autonomy reasons across domains (power, thermal, communication, guidance, navigation, control, mobility, and manipulation), covering both software and hardware elements. It manages external interactions with ground operators to incorporate goals into current and future activities. It also plans resources and activities (scheduling, prioritizing), executes and monitors activities, and manages the system’s health to handle both nominal and off-nominal situations (fault/failure detection, isolation, diagnosis, prognosis, and repair/response).
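The resource-planning role of system-level autonomy can be illustrated with a greedy, priority-first scheduler. This is a simplification chosen for brevity (onboard planners use much richer temporal and resource models); the `Activity` fields, the energy budget, and the single shared timeline are assumptions. Greedy selection is not guaranteed to find the best plan, but it shows how priorities and a shared resource constraint interact across subsystems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    name: str
    priority: int      # higher is more important
    start: float       # hours from plan start
    duration: float    # hours
    energy_wh: float   # energy consumed by the activity

    @property
    def end(self):
        return self.start + self.duration


def overlaps(a, b):
    """True if two activities share any time on the single timeline."""
    return a.start < b.end and b.start < a.end


def schedule(activities, energy_budget_wh):
    """Greedy priority-first selection under an energy budget and a single timeline."""
    plan, used = [], 0.0
    for act in sorted(activities, key=lambda a: -a.priority):
        if used + act.energy_wh > energy_budget_wh:
            continue                      # would exceed the energy budget
        if any(overlaps(act, p) for p in plan):
            continue                      # conflicts with a higher-priority activity
        plan.append(act)
        used += act.energy_wh
    return sorted(plan, key=lambda a: a.start), used


acts = [
    Activity("downlink", priority=10, start=0.0, duration=1.0, energy_wh=30.0),
    Activity("drive", priority=8, start=1.0, duration=3.0, energy_wh=120.0),
    Activity("imaging", priority=6, start=2.0, duration=1.0, energy_wh=20.0),
    Activity("spectrometer", priority=5, start=4.0, duration=2.0, energy_wh=60.0),
]
plan, used = schedule(acts, energy_budget_wh=200.0)
print([a.name for a in plan], used)   # ['downlink', 'drive'] 150.0
```

Here imaging is dropped because it overlaps the higher-priority drive, and the spectrometer is dropped because it would exceed the energy budget.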

An autonomy architecture is a necessary underpinning to properly define, integrate, and orchestrate these functions within a system and to support implementations of functions in software, firmware, or hardware. Domain-specific functions have to be architected in a way that allows system-level autonomy to flexibly and consistently manage the various functions within a system, under both nominal and off-nominal situations. Designers have to identify the correct level of abstraction for a given application to define the scope that the system-level autonomy has to reason about. In other words, to maintain the flexibility that an autonomous system needs, an integrated autonomous system should not impose artificial boundaries that are not grounded in the fundamentals of the problem. Central to such an architecture is ensuring explicitness of intent, consistency of knowledge in light of faults and failures, completeness (or cognizance of limitations) of the system and its behaviors for handling situations, flexibility in the connectivity of functions to handle failures or degradations, traceability of decisions, robustness and resilience of actions, and cognizance of the implications of actions in both the near term and the long term. Explorers may need to operate for decades, as the Voyager spacecraft have done since their launch in 1977 [4].

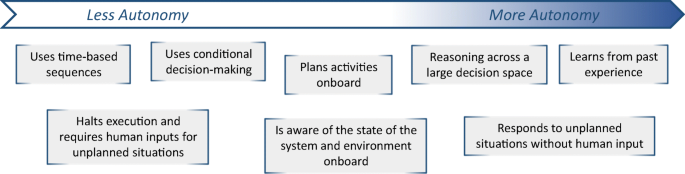

Autonomy that is applied to space systems is a continuum from less autonomy (highly prescriptive tasks and routines) to increasingly autonomous (richer control capabilities onboard), as shown in Fig. 3. Automation sits on the “less autonomy” side of the spectrum: it often follows prescribed actions without establishing full situational awareness onboard and without reasoning onboard about the actions the spacecraft undertakes. Such situational awareness and reasoning are handled by operators on the ground.

It is important to note that moving more control onboard does not take scientists and other humans out of the loop. Rather, it changes the role of operators. More autonomy allows a spacecraft to be aware of and, in many cases, make decisions about its environment and its health in order to meet its objectives. That does not preclude ground operators from interacting with the spacecraft asynchronously to communicate intent, provide guidance, or intervene to the extent necessary and possible.

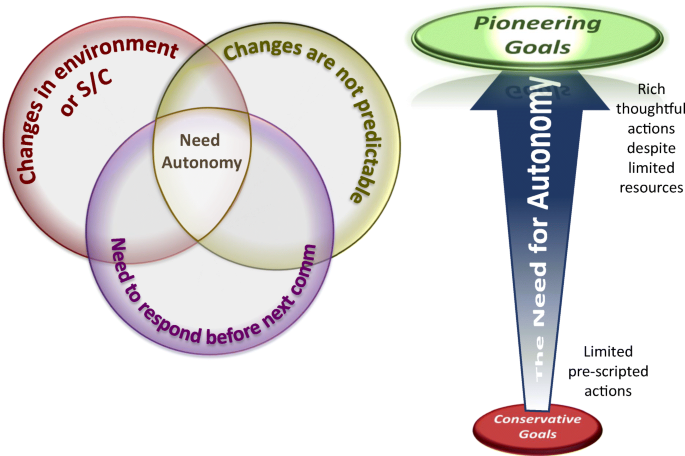

As shown in Fig. 4, there are two sets of constraints that drive the need for autonomy: (1) operational constraints derived from mission objectives and (2) system/environment constraints based on the spacecraft design and the remoteness and harshness of the environment. For the operational constraints, the use of autonomy is traded against non-autonomous approaches. Based on risk and cost, mission objectives may get adjusted, often leaning toward state-of-the-practice non-autonomous approaches wherever possible. These may include scaling back on the minimum required science, which relaxes requirements on productivity or access to more difficult sites. For the system/environment constraints, autonomy is required (not just desired) if the three conditions below are met. These conditions occur when:

Skeptics have argued that, given the risks of deep-space exploration and the rarity of these historic opportunities, future missions are unlikely to entrust such critical decisions to an onboard autonomous system. Past missions, such as Cassini, have achieved great success with ground in the loop. Some would argue that the situations that arise are too numerous, complex, and unknown for a machine to reason about, and too risky not to draw on a broad range of ground expertise and resources (e.g., compute power). With time delays of only single-digit hours across our solar system, engaging ground operators for these remote missions would be both viable and sensible. This is a reasonable argument, and one that has underpinned the state of the practice, but it hinges on two assumptions that may no longer hold in the future: (1) our ability to predict outcomes to a reasonable degree and (2) the availability of adequate resources (e.g., power, time, communication bandwidth, line-of-sight) to keep ground in lock-step with the decision-making loop. Consider a Europa surface mission, whose duration would be constrained by thermal and power considerations [6]. A mission that needs to collect and transfer samples from an unknown surface in a limited time requires a degree of autonomy to successfully handle its potentially unpredictable surface interaction. A different example is the Intrepid lunar mission concept [7]. Given its proximity to Earth, the Moon is a destination that typically would not justify the need for autonomy. However, the proposed mission objectives require traversing 1800 km with hundreds of instrument placements across six distinct geological regions in 4 years, so such a mission would have to rely on a large degree of autonomy to be successful. A detailed study of this mission concept has shown that communication availability (through the Deep Space Network (DSN) as well as projected bandwidths through a commercial communication infrastructure within this decade) would drive the need for largely autonomous operations, with intermittent ground interventions to direct intent and handle anomalies that cannot be resolved onboard.

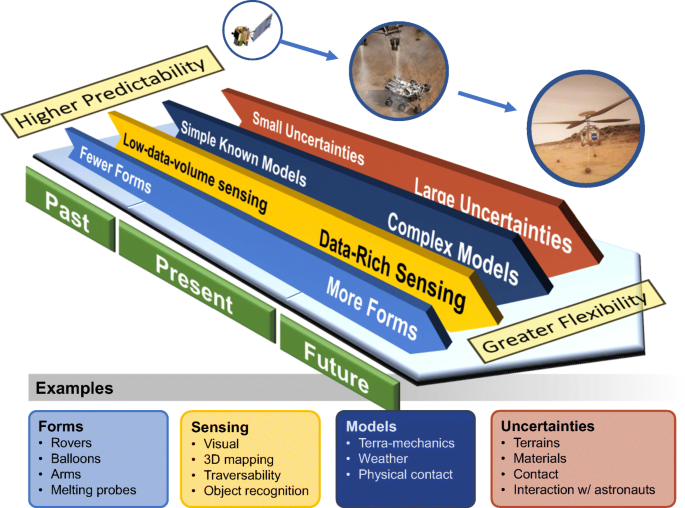

Figure 5 compares trends of past, present, and anticipated future needs. Spanning this time frame, we note a growth in the diversity of forms of robotic explorers: starting with spacecraft on flyby and orbiting missions, to today’s missions that include landers [8], subsurface probes [9], rovers [10], and most recently rotorcraft [11, 12]. Future missions could include even more diverse forms of explorers such as balloons or dirigibles [13], hoppers [14, 15], walkers [16, 17], rappelers [18], deep melt probes [19], mother-daughter platforms, and multi-craft missions [20]. Whereas earlier planetary exploration missions operated in vacuum away from planetary bodies, today’s missions are operating on or into a wide range of planetary surfaces with different properties [9, 21]. The surface and subsurface of such bodies are not well characterized. Scooping and probing these bodies have proved more challenging than anticipated [22, 23, 24].

Also, today’s missions have spacecraft with much richer sensing and perception than prior missions. Landing systems are equipped with high-resolution cameras and LIDARs for terrain-relative navigation and hazard assessment [25, 26]. The Mars rovers carry tens of visual cameras, often in stereoscopic configurations, to establish situational awareness for ground operations. Interactions with the environment, whether for manipulation, probing, sampling, or mobility, are governed by empirical models that are sensitive to terrain heterogeneity [27].

All this to say that current and future robots are operating and interacting in largely unknown environments, with perception-rich systems but limited physical models governing their interaction with the environment [28]. While past missions have been successful by relying on their ability to predict the execution of activities days or even weeks in advance based on orbital dynamics, current and future in situ missions will continue to be challenged in their ability to predict the outcomes of actions, given the complex and incomplete models that govern those dynamics.

In summary, future robots will likely be operating in largely unknown and diverse environments, with perception-rich systems that experience large uncertainties, which severely limits the ability of ground operators to predict behavior. As such, we argue that, to be successful, future robotic systems should be more flexible and more autonomous in handling the situations that arise. Given this rising complexity, made worse by communication and power constraints, the desire to push the boundaries of exploration will drive the need for more autonomy [29••].

Despite the successful demonstrations and uses of autonomous capabilities on a range of spacecraft, from in-space to surface missions, autonomy only gets adopted when it is absolutely necessary to achieve the mission objectives, such as during EDL on Mars. It is often the case that enhanced productivity does not fare well against a perceived increase in risk. The current posture vis-à-vis the adoption of autonomy is understandable, given the large costs and the rare opportunities afforded to explore such bodies.

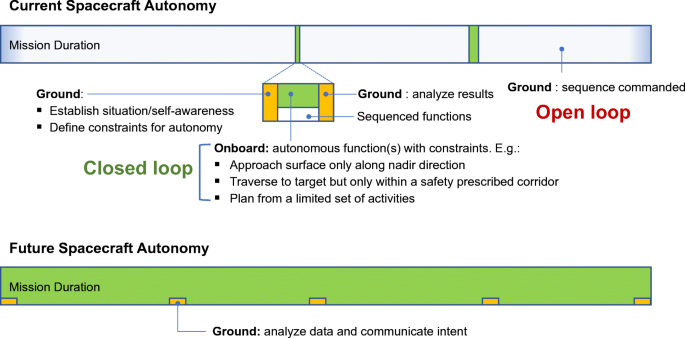

Figure 6 captures an abstract comparison of the state of the practice in spacecraft autonomy (see “Autonomous Robotic Capabilities in Past and Current Space Missions” for a summary of advances) relative to a possible future autonomous spacecraft. To date, autonomous capabilities have been deployed either within limited operational windows (green bars) or in scenarios that were carefully modeled a priori and pre-scripted for a deterministic or relatively well-anticipated outcome. Prior to handing control to the autonomous spacecraft (engagement), ground operators use telemetry, physics-based models, and expert judgement to establish situational awareness, even though the spacecraft telemetry may be stale given communication delays and ground-planning durations. As such, these missions have the benefit of ground-in-the-loop engagement to assess situations pre- and post-deployment. This awareness is also used to constrain the autonomous behaviors before engagement. For example, rover navigation is sometimes aided by ground operators’ assessments of safe keep-in zones and unsafe keep-out zones from orbital data, which constrain the rover’s actions to a relatively safe corridor [30•]. This is akin to a parent guarding their child during its first steps. Spacecraft fault protection is often disabled or restricted during critical events, lest it result in an unexpected course of action that could put the mission at risk [31]. Such state-of-the-practice actions are adopted from successful past missions since they have proven reliable over the years.
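Ground-defined keep-in and keep-out zones act as a geometric constraint on where an autonomous navigator may drive. A minimal sketch, assuming zones are given as polygons in a local map frame (units arbitrary) and a drive segment is checked by sampling points along it: the even-odd ray-casting test used here is a standard point-in-polygon method, while the function names, the zones, and the sampling density are hypothetical. Sampling can miss a keep-out zone thinner than the sample spacing, so a more careful check would intersect segments with polygon edges exactly.

```python
def point_in_polygon(x, y, poly):
    """Even-odd ray-casting test; poly is a list of (x, y) vertices in order."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Does a horizontal ray from (x, y) toward +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def segment_allowed(p, q, keep_in, keep_out, samples=20):
    """True if sampled points on segment p->q stay inside keep_in and outside every keep_out zone."""
    for k in range(samples + 1):
        t = k / samples
        x = p[0] + t * (q[0] - p[0])
        y = p[1] + t * (q[1] - p[1])
        if not point_in_polygon(x, y, keep_in):
            return False
        if any(point_in_polygon(x, y, zone) for zone in keep_out):
            return False
    return True


corridor = [(0, 0), (100, 0), (100, 100), (0, 100)]      # hypothetical keep-in zone
rock_field = [(40, 40), (60, 40), (60, 60), (40, 60)]    # hypothetical keep-out zone
print(segment_allowed((10, 10), (90, 10), corridor, [rock_field]))   # True
print(segment_allowed((10, 50), (90, 50), corridor, [rock_field]))   # False
```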

Therefore, today’s sequence-driven operations rely heavily on human cognizance to establish situational awareness and command the spacecraft. For most space missions, in particular flyby and orbital missions, operators are able to plan activities days or weeks in advance because the physics of the problem are well understood and reasonably modeled. Spacecraft that rely on orbital dynamics, such as Galileo and Cassini, were able to execute pre-programmed sequences and manage resources autonomously for up to 14 days (between two scheduled Deep Space Network antenna passes) with unattended operations [32]. This also includes recognition of onboard failures and execution of appropriate recovery operations. In contrast, for surface operations of the Mars rovers or operations in the vicinity of a small body, the ability to predict the outcomes of activities diminishes significantly, to short time horizons. In situ robotic spacecraft operating in poorly constrained or dynamic environments (e.g., Venus, Mars, or Titan) have to rely on local assessments of the situation at hand in order to take action.

The adoption of increasing levels of autonomy faces both technical and non-technical challenges.

Practices that resulted in past successes are not necessarily suitable for future missions, where the spacecraft has to operate in regimes of much higher uncertainty and poorly modeled physics: namely, the physical interaction with unknown and never-before-visited surfaces. For example, Mars missions have had the benefit of a priori knowledge of the Martian atmosphere and surface from prior missions, which was heavily used in developing models for testing the autonomous capabilities of entry, descent, and landing [33] as well as surface navigation [34, 35]. Future missions that would explore unknown worlds need to handle the large uncertainties and the limited a priori knowledge of the environment in which they must operate. The topographies of such surfaces may be unknown at the scale of the platform (e.g., as of this writing, the best available resolution of Europa’s surface is 6 m/pixel, far coarser than what is necessary at the scale of a future landing platform [36]), and the material properties are often poorly constrained [27] (e.g., the Curiosity rover encountered uncharacteristic terrain with high sinkage in Hidden Valley [37]) or exhibit unexpected behavior (e.g., the Spirit rover unexpectedly broke through a duricrust layer and became embedded and immobilized in unconsolidated fines [38], and the sample scooped by Phoenix appeared to congeal, possibly as a result of solar radiation impinging on it, causing it to stick to the scoop during delivery to the instruments [8]). Future missions would have to operate for extended periods of time depending on available communications and in light of faults and failures that they will inevitably experience during their treacherous exploration of planetary bodies. Being able to adapt and learn how to operate in these harsh environments is becoming an important aspect of deep-space exploration.

The major technical challenges for autonomy are:

Among the non-technical barriers are strategic and programmatic challenges associated with the current acceptable risk posture related to mission cost, the necessary reliance on heritage for competed missions to fit within cost caps and to minimize risk, and the limited demand for autonomy from currently formulated missions, which are often conceived based on the state of the practice rather than on what could become viable. The current paradigm of ground-in-the-loop operations does not scale well to operating multiple coordinated spacecraft in large formations [20]. While the cost of operations would initially be higher for autonomous systems, as the capability matures and becomes standard practice, the expected cost would eventually converge to a steady state, potentially resulting in substantial reductions in operational cost. Other non-technical challenges are related to changes in people’s roles and a sense of job displacement and loss of control.

Autonomy would enable (a) exploring new destinations with unknown or dynamic environments, (b) increasing productivity of in situ operations, (c) increasing robustness and enabling graceful degradation of spacecraft operation, and (d) reducing operations cost and enhancing operability.

To understand where we are going, it is useful to review in detail where we have been. This section provides an overview of notable prior missions that have contributed to autonomy progress for space applications.

Over the past two decades, the world has witnessed the impact of robotics on the surface exploration of Mars. This includes the first 100 m of Sojourner’s tracks on the red planet, Spirit and Opportunity’s exploration of dramatically different regions in different hemispheres, and Curiosity’s climbing of Mount Sharp. Complementing Spirit and Opportunity’s discovery of evidence that water once flowed on the Martian surface, the Phoenix mission used its robotic arm to sample water ice deposits in the shallow subsurface of the northern polar region. The Curiosity rover has investigated the Martian geology in more detail than its predecessors, using its mobility and manipulator to drill and transfer drill cuttings to its instrument suite. It found complex organic molecules in the Martian regolith and detected seasonal fluctuations of low methane concentrations in the atmosphere. Most recently, the InSight mission used its robotic arm to place two European instruments on the Martian surface: a high-precision seismometer that detected the first-ever Marsquake and a heat-flow sensing mole intended to penetrate meters below the surface.

In 1999, the Remote Agent Experiment aboard the Deep Space 1 mission demonstrated goal-directed operations through onboard planning and execution and model-based fault diagnosis and recovery, operating two separate experiments for 2 days and later for 5 consecutive days [43, 44]. The spacecraft demonstrated its ability to respond to high-level goals by generating and executing plans onboard, under the watchful eye of model-based fault diagnosis and recovery software. On the same mission, autonomous spacecraft navigation was demonstrated during 3 months of cruise of the 36-month-long mission. The spacecraft also executed a 30-min autonomous flyby, demonstrating onboard asteroid detection, orbit update, and low-thrust trajectory-correction maneuvers.

In the decade to follow, the Stardust mission demonstrated a similar flyby feat of one asteroid and two comets [45]. Between 2005 and 2010, the Deep Impact mission conducted an autonomous 2-h terminal guidance of a comet impactor and separately a flyby that tracked two comets [46]. It demonstrated detecting the target body, updating the relative orbits, and commanding the spacecraft using low-thrust maneuvers. Autonomy has also been used to aid science operations of Earth-orbiting missions such as the Earth-Observing-1 spacecraft, which used onboard feature and cloud detection to retarget subsequent observations for identifying regions of change or of interest [47]. The IPEX mission used autonomous image acquisition and data processing for downlink [48]. Most recently, the ASTERIA spacecraft transitioned its commanding from time-based sequences to task networks and demonstrated onboard orbit determination using passive imaging in Low Earth Orbit (LEO) without GPS [49].

Operating in proximity of and on small bodies has proven particularly time-consuming and challenging. To date, only five missions have attempted to operate for extended periods of time in close proximity to such small bodies: NEAR Shoemaker, Rosetta, Hayabusa, Hayabusa2, and OSIRIS-REx [45, 50, 49, 52]. Many factors make operating around small bodies particularly challenging: the microgravity of such bodies, debris that can be lofted off their surfaces, their irregular topography and the corresponding sharp shadows and occlusions, and their unconstrained surface properties. The difficulties of reaching the surface, collecting samples, and returning these samples stem from uncertainties of the unknown environment and the dynamic interaction with a low-gravity body. The deployment of, and access to the surface by, Hayabusa’s MINERVAs [53] and Rosetta’s Philae [54] highlight some of these challenges and, together with OSIRIS-REx [55], underscore our limited knowledge of the surface properties. Because of the uncertainty associated with such knowledge, missions to small bodies typically rely on some degree of autonomy.

During entry, descent, and landing (EDL) on Mars, command and control can only occur autonomously because of communication delays and constraints. Landing on Mars is particularly challenging because of its thin atmosphere and the need to decelerate to a near-zero terminal descent velocity with limited fuel, requiring guided entry for deceleration to velocities at which parachutes may be used effectively. During parachute descent, wind introduces uncertainty by imparting a lateral velocity to the descending spacecraft. As a result, in 2004, the Mars Exploration Rover (MER) missions used onboard autonomy [56] to estimate lateral velocity from descent images and correct it if necessary.
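The geometric core of estimating horizontal velocity from descent imagery can be sketched as follows. This is not the MER flight algorithm [56]; it assumes a nadir-pointing pinhole camera, flat terrain, an altitude known from another sensor (for example an altimeter), no attitude change between frames, and feature shifts already measured by some tracker. Under those assumptions each pixel spans roughly altitude divided by focal length (in pixels) meters on the ground, so dividing the mean ground displacement by the time between frames gives a velocity estimate. The function names and the correction threshold are hypothetical.

```python
import math


def horizontal_velocity(shifts_px, altitude_m, focal_px, dt_s):
    """Estimate horizontal velocity (m/s) from feature shifts between two descent images.

    Assumes a nadir-pointing pinhole camera, flat terrain, known altitude,
    and no attitude change between the two frames.
    """
    n = len(shifts_px)
    if n == 0:
        raise ValueError("need at least one tracked feature")
    # Average the shifts of several tracked features to reduce noise.
    mean_dx = sum(dx for dx, _ in shifts_px) / n
    mean_dy = sum(dy for _, dy in shifts_px) / n
    gsd = altitude_m / focal_px          # ground distance per pixel at nadir (m/px)
    # The terrain appears to move opposite to the vehicle, hence the minus sign.
    return (-mean_dx * gsd / dt_s, -mean_dy * gsd / dt_s)


def needs_correction(velocity, limit_mps):
    """Hypothetical decision rule: correct if horizontal speed exceeds the limit."""
    return math.hypot(*velocity) > limit_mps


v = horizontal_velocity([(-10, 4), (-12, 4), (-8, 4)],
                        altitude_m=1500.0, focal_px=1000.0, dt_s=2.0)
print(v)                          # (7.5, -3.0)
print(needs_correction(v, 5.0))   # True
```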

The Chang’e 4 lunar mission carrying the Yutu-2 rover demonstrated high-precision autonomous landing in complex terrain on the lunar far side. The spacecraft used terrain relative navigation as well as hazard assessment and avoidance to land in the absence of radiometric data [57].

Surface contact and interaction is typically needed for instrument placement and sampling operations in scientific exploration. The Mars Exploration Rovers demonstrated autonomous approach and instrument placement on a target selected from several meters away [59, 60]. The OSIRIS-REx mission captured samples from the surface of asteroid Bennu using its 3.4-m extended robotic arm in a touch-and-go maneuver that penetrated to a depth of ~50 cm, well beyond the expected depth for the sample capture.

Surface mobility greatly expands the value of a landed mission by enabling contact and interaction with a broader part of the surface. To achieve surface mobility safely, every Mars rover mission hosted some form of autonomous surface navigation. In 1997, the Sojourner rover of the Mars Pathfinder mission demonstrated autonomous obstacle avoidance using laser striping together with a camera to detect untraversable rocks (positive geometric hazards). It then used bang-bang control of its brushed motors to drive and steer around hazards along its path and reach its designated goal. The Mars Exploration Rovers, Spirit and Opportunity, and the Mars Science Laboratory Curiosity rover used a more sophisticated autonomous navigation algorithm, relying on dense stereo mapping from their body- and mast-mounted cameras to assess terrain hazards. Algorithms processed three-dimensional point clouds into a grid map, estimating the slope, height differences, and roughness of the rover’s footprint across each terrain patch [34].
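The per-patch terrain metrics mentioned above can be sketched by fitting a plane to the points that fall under the rover footprint. This is one common formulation, assumed here for illustration rather than taken from the flight software [34]: slope is the tilt of the least-squares plane z = ax + by + c, height difference is the spread of point heights, and roughness is the largest residual from the plane. The normal equations are solved with Cramer's rule to keep the example dependency-free; the function names are hypothetical.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c; points is a list of (x, y, z)."""
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, len(points)]]
    rhs = [sxz, syz, sz]
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate patch: need at least 3 non-collinear points")
    coeffs = []
    for col in range(3):            # Cramer's rule: replace one column with rhs
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        coeffs.append(_det3(mc) / d)
    return tuple(coeffs)            # (a, b, c)


def patch_metrics(points):
    """Return (slope_deg, height_diff_m, roughness_m) for one terrain patch."""
    a, b, c = fit_plane(points)
    slope_deg = math.degrees(math.atan(math.hypot(a, b)))
    heights = [z for _, _, z in points]
    height_diff_m = max(heights) - min(heights)
    roughness_m = max(abs(z - (a * x + b * y + c)) for x, y, z in points)
    return slope_deg, height_diff_m, roughness_m


patch = [(x, y, 0.1 * x) for x in range(3) for y in range(3)]   # a 10 % grade
slope, height_diff, rough = patch_metrics(patch)
print(round(slope, 2), round(height_diff, 2), round(rough, 6))   # 5.71 0.2 0.0
```

A navigator would then compare each metric against vehicle-specific limits to label the patch as safe or hazardous.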

The Mars 2020 Perseverance rover uses an even more sophisticated algorithm to evaluate a safe traverse path. It improves stereo sensing, significantly speeds up processing using dedicated FPGAs, and evaluates the tracks of the wheels across the terrain to assess traversability. For path planning, given the computationally intensive calculation of assessing body-terrain collision when placing a passively articulated rover suspension along the terrain path, a conservative approximation is used to simplify the computation while preserving a safe, collision-free path. In addition to the local cost evaluation of the rover’s traverse across the nearby terrain, a global cost is calculated from orbital and previously observed rover data to determine the proper action to take.
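The combination of local and global costs can be illustrated with a simplified arc-selection step. In this sketch, which is an assumption and not the Perseverance navigation algorithm, each candidate arc carries the local traversability costs of the map cells it crosses (infinite for an untraversable cell), and its end cell carries a cost-to-go to the goal, such as one derived from orbital data. The arc with the lowest weighted sum is chosen; the weights, arc names, and cost values are hypothetical.

```python
import math


def choose_arc(arcs, cost_to_go, w_local=1.0, w_global=1.0):
    """Pick the arc with the lowest weighted local + global cost.

    arcs: name -> (local costs of the map cells the arc crosses, end-cell id)
    cost_to_go: end-cell id -> estimated cost from that cell to the goal
    Returns (name, total_cost), or (None, inf) if no arc is traversable.
    """
    best, best_cost = None, math.inf
    for name, (cells, end) in arcs.items():
        if any(math.isinf(c) for c in cells):
            continue                       # arc crosses an untraversable cell
        total = w_local * sum(cells) + w_global * cost_to_go.get(end, math.inf)
        if total < best_cost:
            best, best_cost = name, total
    return best, best_cost


arcs = {
    "left": ([1.0, 1.0, 2.0], "A"),
    "straight": ([1.0, math.inf, 1.0], "B"),   # best cost-to-go, but blocked
    "right": ([2.0, 2.0, 2.0], "C"),
}
cost_to_go = {"A": 10.0, "B": 2.0, "C": 5.0}
print(choose_arc(arcs, cost_to_go))   # ('right', 11.0)
```

The straight arc would lead most directly toward the goal, but the local hazard assessment vetoes it, and the global term then breaks the tie between the remaining arcs.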

The Mars rovers have traversed distances of hundreds of meters autonomously, well beyond what has been visible in imagery used by ground operators (i.e., over the horizon driving). Over one weekend, the Opportunity rover drove 200 m in a multi-sol autonomous driving sequence.

This is only a prelude to what is anticipated. Ongoing mission concept studies and research programs are investigating a range of robotic systems that would explore the surfaces of other planetary bodies. These include robotic arms that would capture and analyze samples from Europa’s surface. A rotorcraft has completed multiple powered flights in the thin Martian atmosphere, and a larger one is being built to explore Titan’s surface, leveraging its thick atmosphere [11, 12]. Probes are being studied to reach the oceans of icy worlds, either by getting through kilometers of cryogenic ice or by weaving their way through vents and crevasses of Enceladus’ tiger stripes, the site of plumes in the moon’s southern region [61]. Lunar rovers are being studied to cover thousands of kilometers to explore more disparate regions near the lunar equator [7] and in the polar regions.

These increasingly rich forms of explorers will require a greater degree of autonomy, in particular, for ocean worlds and remote destinations, where the surfaces of target bodies have never been visited before and where communications and power resources are more constrained than those on the Moon and Mars. While such explorers are likely to be heterogeneous in their form, the foundational elements of autonomy might be shared among such platforms. It is precisely these foundational elements that would need to be advanced to enable robotic systems to effectively conduct their complex missions, in spite of the limited knowledge and large uncertainties of the harsh environments to be explored.

Given the aforementioned challenges, how can we take the next major step in advancing autonomy? To do so, we consider the key gap, which is to reliably operate in situ in a partially known and harsh environment and under inevitable system degradations. Addressing this gap would drive the maturation of the needed function- and system-level autonomy capabilities in an integrated architecture that handles a range of conditions in a principled way. A number of such autonomy challenges were captured at a 2018 NASA Autonomy Workshop held by the Science Mission Directorate [62].

One example that could provide an adequately challenging near-term opportunity for advancing robotics and autonomous systems is using an affordable SmallSat (i.e., a spacecraft of less than 180 kg, with standardized form factors at the smaller sizes, such as 6U or 12U) to travel to, approach, land on, and operate autonomously on the surface of a near-Earth object (NEO). The SmallSat would be designed to operate using high-level goals from the ground, which would also provide operational oversight. Frequently asked questions and answers for this concept are discussed below.

Why are NEOs compelling for exploration? The exploration of NEOs is important to four thrusts: science, human exploration, in situ resource utilization, and planetary defense. Previous missions such as Hayabusa and Hayabusa2 were primarily science focused; they largely operated with ground in the loop, and their surface operational capabilities were therefore limited at the time. We envision autonomous robotic access to the surface of NEOs that would expand on these successes and have substantial feed-forward potential for reaching more remote bodies such as comets, asteroids, centaurs, trans-Neptunian objects, and Kuiper-belt objects. Small bodies are abundant, diverse in composition and origin, and found across the solar system out to the Oort Cloud [50].

Why are NEOs well-suited targets for advancing autonomy? NEOs embody many of the challenges representative of even more remote and extreme destinations while remaining accessible to SmallSats. Because NEOs are so diverse, their environments are relatively unknown a priori, and because of their microgravity, a spacecraft’s interaction near or on their surface would be highly dynamic. Further, such a mission cannot be easily emulated in a terrestrial analog environment, and the utility of simulation is limited by the unknown characteristics of the environment to be encountered.

Why is autonomy enabling for small bodies? Autonomy would broaden access by reducing operations cost, and it would scale to reach far more diverse bodies than the current ground-in-the-loop exploration paradigm allows. With onboard situational awareness, autonomy enables closer flybys, more sophisticated maneuvers during proximity operations, and safe landing and relocation on the surface. Operating near, on, or inside these bodies requires autonomy because of their largely unknown, highly rugged topographies and the dynamic nature of the interaction between the spacecraft and the body.

Approaching, landing on, and reaching designated targets on a NEO requires technical advances in computer vision, machine learning, small spacecraft, and surface mobility. An autonomous mission with limited a priori knowledge of the body would establish, during approach, estimates of the body’s rotation rate, rotation axis, shape, gravity model, local surface topography, and safe landing sites using onboard sensing, computing, and algorithms. Compared with ground-based processing, which is subject to limited communication bandwidth, an onboard system could acquire and process images at higher rates, which benefits the computer vision algorithms, and would require a much smaller operations team. Machine learning could encode complex models and handle large uncertainties, such as identifying and tracking surface landmarks across large scale changes and varying lighting conditions over tens of thousands of kilometers of approach. Furthermore, machine learning could handle complex dynamic interactions with the surface, whose geophysical properties are not known a priori, to enable effective mobility and manipulation. Once established, such an autonomous capability would be more broadly applicable to planetary bodies with unknown motions or rotations, topographies, and atmospheric conditions, where atmospheres exist.
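One ingredient of the approach-phase estimation described above, recovering the body’s rotation rate from a tracked landmark, can be sketched in a few lines. This is a simplified illustration, not an onboard algorithm from the text: it assumes (hypothetically) that the spin axis is aligned with the camera boresight and that the body’s centroid in the image is already known, so a tracked landmark traces a circle and its unwrapped angle grows linearly with time; a least-squares slope then gives the rotation rate. The names `unwrap` and `estimate_spin_rate` are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def unwrap(angles: Sequence[float]) -> List[float]:
    """Remove 2*pi jumps so consecutive angles differ by less than pi."""
    out = [angles[0]]
    for a in angles[1:]:
        # Wrap the raw step into [-pi, pi) and accumulate it.
        step = (a - out[-1] + math.pi) % (2 * math.pi) - math.pi
        out.append(out[-1] + step)
    return out


def estimate_spin_rate(times: Sequence[float],
                       track: Sequence[Tuple[float, float]],
                       center: Tuple[float, float]) -> float:
    """Least-squares rotation rate (rad/s) from one landmark's image track.

    Hypothetical simplification: spin axis along the camera boresight, so the
    landmark circles the body's image centroid at a constant angular rate.
    """
    cx, cy = center
    theta = unwrap([math.atan2(y - cy, x - cx) for x, y in track])
    n = len(times)
    t_bar = sum(times) / n
    th_bar = sum(theta) / n
    # Ordinary least-squares slope of angle versus time.
    num = sum((t - t_bar) * (th - th_bar) for t, th in zip(times, theta))
    den = sum((t - t_bar) ** 2 for t in times)
    return num / den


if __name__ == "__main__":
    # Synthetic, noise-free track: 4-hour spin period, one image every 10 min.
    omega_true = 2 * math.pi / (4 * 3600)
    times = [600.0 * k for k in range(48)]
    track = [(512 + 100 * math.cos(omega_true * t + 0.3),
              512 + 100 * math.sin(omega_true * t + 0.3)) for t in times]
    omega = estimate_spin_rate(times, track, (512.0, 512.0))
    print(f"estimated period: {2 * math.pi / omega / 3600:.3f} h")
```

On this noise-free synthetic track the estimate recovers the 4-hour period. Note that unwrapping only works if the body turns by less than half a revolution between images, which is one concrete reason higher onboard image acquisition rates help; a real estimator would also solve for the spin axis, reject outliers, and fuse many landmarks.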

Such a scenario has clear success metrics for each stage of increasing difficulty. During cruise, trajectory correction maneuvers would guide the craft to the approach point, where the target becomes detectable in the camera’s narrow field of view (subpixel in size, but visible as a point-spread function). The approach is a particularly challenging phase; its success metric is reaching a hover point at a safe distance with the body parameters (trajectory, rotation, and shape) established. The subsequent phase would involve successful landing site selection, guidance, and safe landing. For a NEO, this maneuver would allow the flexibility of aborting and retrying, given the body’s microgravity. Mobility on the surface to target locations and the ability to manipulate the shallow regolith to acquire measurements would constitute the last phase and its success metric. While all operations would be executed autonomously, they would respond to goals set by scientists and ground operators, and the craft’s performance would be continually monitored by ground operators as the capability is proven. A final success metric is the downlink of key information needed to trace and analyze the onboard decisions the spacecraft has made throughout the mission.
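The phase-by-phase success metrics above map naturally onto a small state machine. The following sketch is a hypothetical illustration, not a flight sequencing design: each phase advances only when its metric is reported as met, a failed landing aborts back to hover for a retry (exploiting the microgravity), and every decision is appended to a log that could be downlinked for tracing. The `Mission` class, the retry limit, and the metric strings are assumptions for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import List, Tuple


class Phase(Enum):
    CRUISE = auto()
    APPROACH = auto()
    LANDING = auto()
    SURFACE = auto()
    DONE = auto()


# Metric that must be met to leave each phase (paraphrased from the scenario).
SUCCESS_METRIC = {
    Phase.CRUISE: "target detected in narrow-FOV camera",
    Phase.APPROACH: "hover point reached; trajectory, rotation, shape estimated",
    Phase.LANDING: "site selected and safe touchdown",
    Phase.SURFACE: "target reached and regolith measurement acquired",
}

NEXT = {
    Phase.CRUISE: Phase.APPROACH,
    Phase.APPROACH: Phase.LANDING,
    Phase.LANDING: Phase.SURFACE,
    Phase.SURFACE: Phase.DONE,
}


@dataclass
class Mission:
    max_landing_attempts: int = 3  # hypothetical retry budget
    phase: Phase = Phase.CRUISE
    landing_attempts: int = 0
    awaiting_ground: bool = False
    # Decision log kept on board so operators can trace choices after downlink.
    log: List[Tuple[str, str]] = field(default_factory=list)

    def step(self, metric_met: bool) -> Phase:
        """Advance one phase if its metric is met; abort and retry failed landings."""
        if self.phase is Phase.DONE or self.awaiting_ground:
            return self.phase
        here = self.phase.name
        if metric_met:
            self.log.append((here, "met: " + SUCCESS_METRIC[self.phase]))
            self.phase = NEXT[self.phase]
        elif self.phase is Phase.LANDING:
            # Microgravity permits an abort back to hover and another attempt.
            self.landing_attempts += 1
            if self.landing_attempts < self.max_landing_attempts:
                self.log.append((here, "abort: back to hover, retry"))
            else:
                self.log.append((here, "retries exhausted: hold at hover, ask ground"))
                self.awaiting_ground = True
        else:
            self.log.append((here, "not yet met: " + SUCCESS_METRIC[self.phase]))
        return self.phase
```

In this sketch, exhausting the retry budget sets `awaiting_ground`, reflecting the ground oversight the scenario retains; how and when operators would intervene is left open.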

Results from an earlier analysis of both the accessibility and feasibility of such a scenario showed promise [63, 64]. To simplify access to the surface, the spacecraft would be designed to self-right and operate from any stable state, hopping and tumbling similar to what has been demonstrated in parabolic flight, but possibly using cold gas thrusters in lieu of reaction wheels [15]. Once on the surface, we assume a limited lifetime to reduce the constraints associated with large deployable solar panels. In addition to guiding the spacecraft during landing, micro-thrusters could also be used to relocate the platform to different sites on the body. Miniaturized manipulators developed for CubeSats could enable such a platform to manipulate the surface for sampling and other measurements [65]. Such a scenario could be extended to multi-spacecraft missions.
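Hopping and thruster-based relocation are bounded by the body’s escape speed: a hop that is too energetic would leave the body entirely. For a uniform, non-rotating sphere of radius R and density ρ, surface gravity is g = (4/3)πGρR and escape speed is v_esc = √(2gR) = R√(8πGρ/3). The sketch below evaluates these expressions. The spherical, non-rotating model and the 50% safety margin in the hypothetical `hop_is_safe` helper are simplifying assumptions; real NEOs are irregular and spin, which lowers the effective escape speed at some locations.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2


def surface_gravity(radius_m: float, density_kg_m3: float) -> float:
    """g = G*M/R^2 for a uniform sphere with M = (4/3)*pi*R^3*rho."""
    return 4.0 / 3.0 * math.pi * G * density_kg_m3 * radius_m


def escape_speed(radius_m: float, density_kg_m3: float) -> float:
    """v_esc = sqrt(2*G*M/R) = sqrt(2*g*R) for a uniform, non-rotating sphere."""
    return math.sqrt(2.0 * surface_gravity(radius_m, density_kg_m3) * radius_m)


def hop_is_safe(hop_speed: float, radius_m: float, density_kg_m3: float,
                margin: float = 0.5) -> bool:
    """Accept a hop only below a fraction of escape speed (hypothetical margin)."""
    return hop_speed < margin * escape_speed(radius_m, density_kg_m3)
```

For an illustrative body of 250 m radius and density 2000 kg/m³ (example values, not taken from the text), `escape_speed(250, 2000)` is about 0.26 m/s, so with the 50% margin a hop would have to stay below roughly 0.13 m/s.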

In addition to the functions that would have to be matured, this scenario would drive the development of an architecture that integrates function- and system-level elements, enabling cross-domain models to interact at the proper fidelity levels to execute a full and adequately challenging mission, with provisions for ground oversight and retries. The relatively low cost of such a technology demonstration would allow a more aggressive risk posture to substantially advance autonomous robotic capabilities. Making the architecture and algorithms widely available would lower the barrier to entry for universities, creating greater opportunities to send SmallSat missions to diverse NEOs.

In this paper, we have provided a broad overview of autonomy advances for robotic spacecraft, summarized the state of the practice, identified challenges, and shared the potential benefits of greater adoption. We presented an argument for why the state of the practice of sequence-driven, ground-controlled operations, which led to numerous successful missions, would not be a well-suited paradigm for future exploration, where missions have to operate in situ with physical interactions with the bodies or their atmospheres, in poorly constrained environments, with limited a priori knowledge, and under harsh environmental conditions. We examined experiences from missions over the past two decades and highlighted how unanticipated situations arise that current systems are unable to handle without the expertise of ground operators and tools. Future autonomous systems would have to handle a wide range of conditions on their own if they are to operate at more remote destinations. Despite several demonstrations of autonomous capabilities, the state of the practice remains largely reliant on ground-in-the-loop operations. The need for autonomy is driven by two main factors: mission objectives and environmental constraints. Mission objectives are typically set to reduce the need for new technologies, but environmental constraints would eventually drive the need for autonomy. The broader adoption of autonomy is constrained not only by technical barriers, such as the advancement of algorithms, the integration of cross-domain capabilities, and the verification and validation of such capabilities, but also by non-technical factors related to acceptable mission risk, cost, and changes in the roles of humans interacting with the spacecraft. We articulate why future missions would require more autonomy: our ability to predict the execution of onboard activities seriously diminishes for in situ missions, as evidenced by two decades of Mars surface exploration.
We highlight key autonomy advances across different mission phases: in-space, proximity operations, landed, and surface missions. We conclude by arguing for sending an autonomous spacecraft to a NEO to approach, land on, traverse, and sample its surface as an adequately challenging scenario to substantially advance both function-level and system-level autonomy elements. Such a scenario could be matured and demonstrated using SmallSats and has clear success metrics for each mission phase.

As our current missions discover a multitude of planets around other stars, we are compelled to ask ourselves what it would take to someday explore those exoplanets. A mission to perform in situ exploration of even the nearest exoplanetary system is a daunting yet exciting challenge. Such a mission will undoubtedly require a sophisticated level of autonomy together with major advances in power, propulsion, and other spacecraft disciplines. This is a small step toward a goal we only dare to dream about.

This work was performed at the Jet Propulsion Laboratory, California Institute of Technology under contract to the National Aeronautics and Space Administration. Government sponsorship is also acknowledged.